Why High-Volume Data Systems Must Be Designed for Human Confidence

This article is written for teams building healthtech automation systems at scale, where correctness alone is not sufficient.

TL;DR

In large-scale health-tech automation, technical accuracy of a solution alone doesn’t guarantee success and adoption. Automation must be explainable to the people who operate it.

A system can apply every rule deterministically and still fail in practice if it keeps resurfacing the same edge cases or hides changes from its users.

What matters at scale is confidence, not just correctness. Confidence comes when automation:

- Persists prior human decisions as part of its logic

- Makes every automated change observable and explainable

- Reduces repeated cognitive load so humans can safely step out of the loop

Our argument is simple: automation that ignores trust will collapse under its own volume. Automation that designs for trust will scale, sustain adoption, and actually deliver the value it promises.

Why a Technically Correct System May Fail Operationally

One of our engagements involved designing a physician data ingestion and reconciliation system that processed large datasets uploaded on a recurring basis.

From an architectural standpoint, the system was sound. Incoming files were compared against a cloned snapshot of the production database. Deterministic rules detected valid changes, applied updates automatically, and surfaced only a subset of records for human review.

From a correctness perspective, the system behaved exactly as designed.

The issue appeared over time.

Most uploads were largely identical from run to run, with only a small fraction of records changing. Many edge cases had already been reviewed and accepted in earlier uploads. But because those human decisions were not persisted as part of the system’s logic, the same conditions kept resurfacing. Accepted missing fields and known non-issues were flagged again.

From the user’s perspective, this was confusing.

If a system repeatedly asks for the same decisions, it does not feel automated. It feels incomplete.

At the same time, many changes were being applied automatically in the background, with no clear way to review what had changed or why.

The system was correct.

But it did not inspire confidence.

Why correctness wasn’t enough at scale

In most enterprise ETL systems, if the rules run and data integrity holds, the system is considered “correct.” At small volumes, that definition works.

At scale, it breaks down.

When systems process tens of thousands of records per upload, operators cannot manually revalidate decisions. To rely on automation, they need to see what changed, understand why it changed, and trust that the same decision will not be asked twice.

Without that visibility and continuity, teams fall back to manual checks—even when the system is technically correct.

At that point, automation stops delivering value.

Trust is a system design problem, not a UI problem

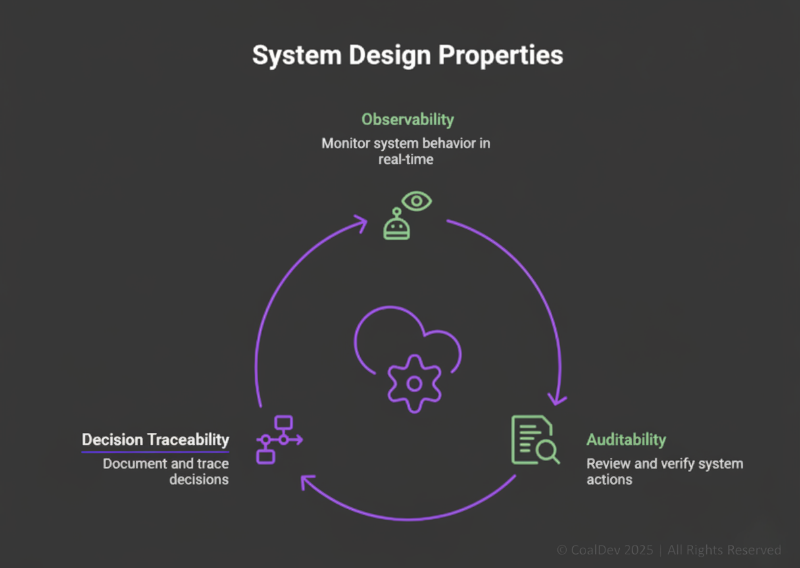

In high-volume ETL systems, automation only works when humans can step out of routine, deterministic decisions and still trust the system’s outcomes. That trust doesn’t come from better screens or clearer buttons. It comes from how decisions are modeled, remembered, and exposed by the system itself.

This shifts the design goal.

It’s about ensuring the system remembers prior decisions, makes its changes visible, and doesn’t force humans to repeatedly re-validate the same outcomes.

Three principles that actually fixed it

1. Compare Against System State

Automation decisions should be based on the current authoritative system state, not prior input files. This ensures consistency and avoids cascading errors when files are replayed, reordered, or partially duplicated.

2. Persist Human Decisions as First-Class Signals

When a human reviews and accepts a condition, that decision should be stored as part of the system’s decision logic.

If the same condition reappears, the system should not ask again.

Automation that does not remember prior decisions is functionally incomplete.

3. Design for Post-Decision Visibility

Not all automated changes require approval. However, all automated changes should be reviewable.

At a minimum, systems should expose:

- Previous value

- Updated value

- Rule or condition that triggered the change

This provides auditability and verification without reintroducing manual review into the critical path.

Net Net

Automation initiatives fail when they are designed only for correctness.

They succeed when trust and visibility are addressed at the system design stage.

That requires engineers to think beyond rules and pipelines and account for:

- Which decisions should never be repeated?

- What visibility allows operators to rely on outcomes

- What guarantees let humans step out of the loop safely

Correctness makes automation possible. Confidence makes it sustainable and also helps in adoption. If people have to keep checking your system, they don’t trust it. And if they don’t trust it, automation stops being automation.